Input Output

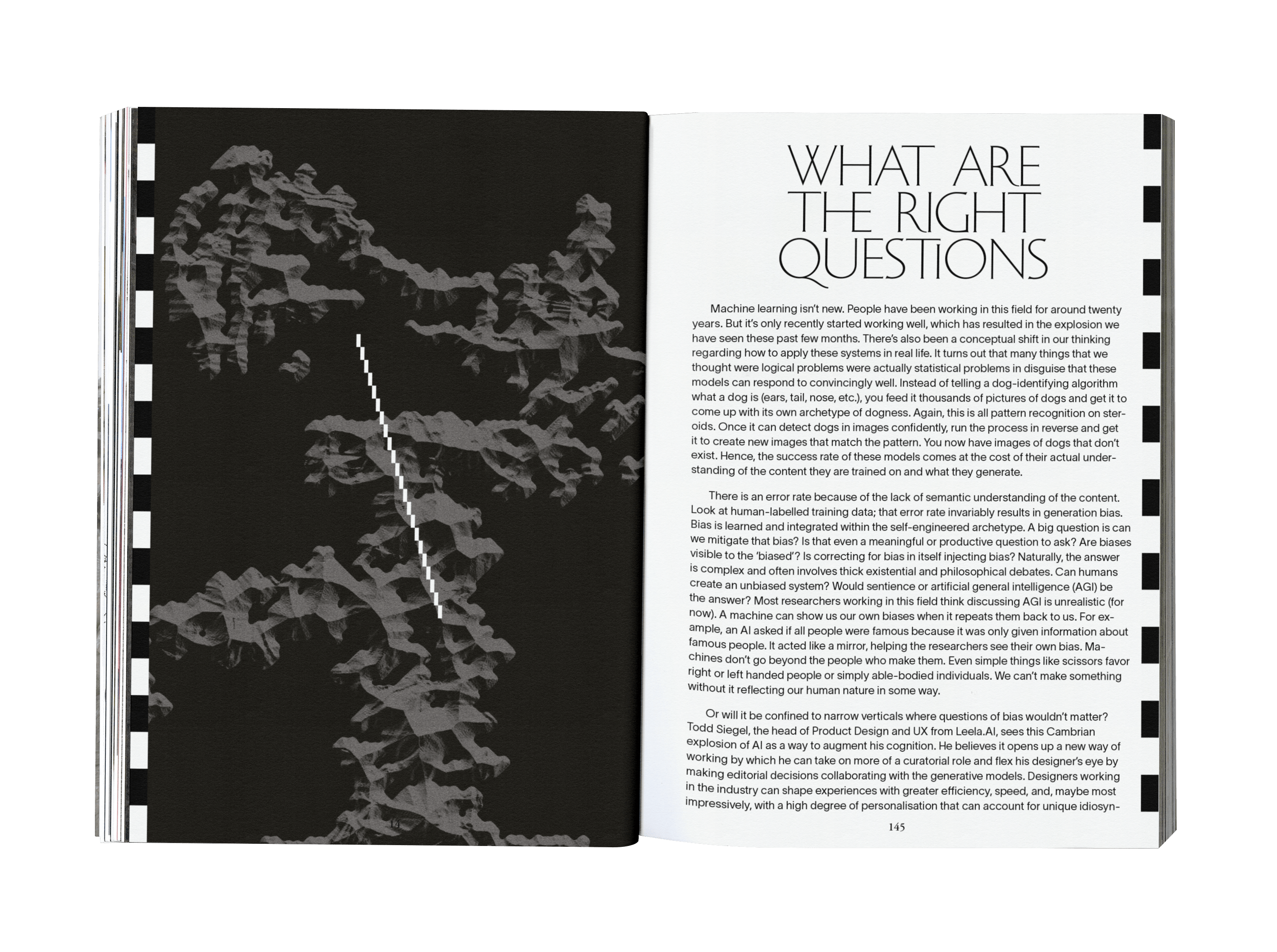

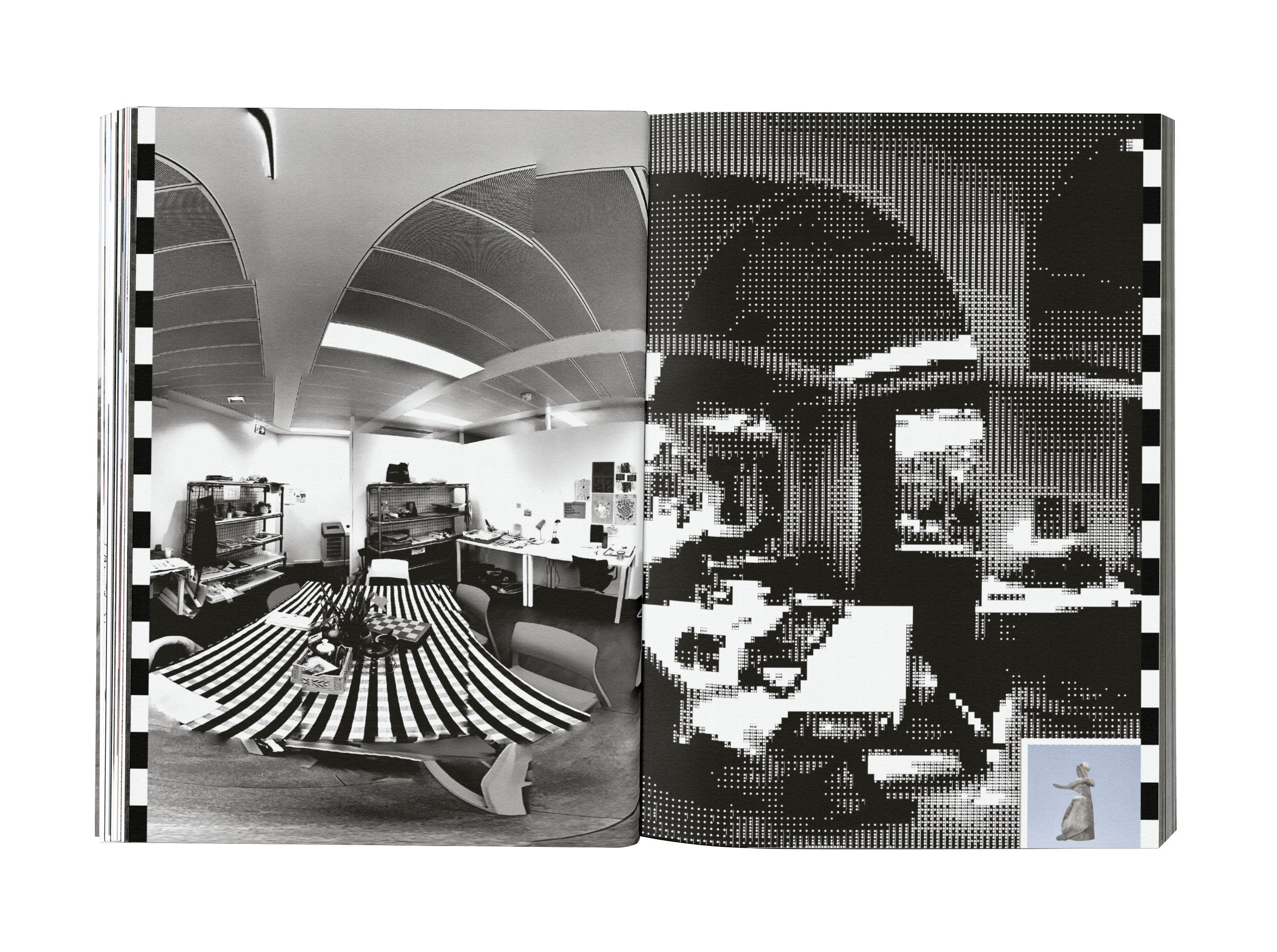

Leveraging the growing accessibility of generative machine learning, I set out to reveal its potential biases. Using these models, I created text descriptions of images connected to my Indian cultural heritage and then fed these descriptions as prompts into image generators. The outputs deviated from the originals, often amplifying stereotypes or drifting towards a Westernized hybrid. These biases are sometimes overt but frequently subtle, and they can be harmful to under-represented and marginalized groups.

Echoing Hito Steyerl’s concept of ‘poor images’, these distortions embody a loss of meaning. I’ve collated these experiments into a book that serves as both a resource and a historical record. It scrutinizes these systems, their implications, and comparable disruptions in the history of the creative sector, translating digital discourse into a tangible medium.